|

Turbo codes

Turbo codes

Turbo codes are the most exciting

and potentially important development in coding theory in recent years. It was

first introduced in 1993 and offers near idealistic, Shannon-limit error correction

performance. This capability has lead Turbo codes to become an emerging coding

technique for the next-generation wireless communication protocol, such as Wideband

CDMA (W-CDMA) and subsequent 3rd Generation Partnership Project (3GPP) for IMT-2000.

softDSP's

Turbo decoder core is implemented with VHSIC Hardware Description Language (VHDL)

and offers flexible interface for use in various applications

such as 3GPP, Power-line modem, Millitary comm. Wireless and Satellite communications,

and magnetic storage channel, etc. softDSP completed turbo codes satisfiying 3GPP standard and now actively

extending its application further from hard disk drives to satellite links.

Turbo decoder

The turbo

code is composed of two or more identical recursive systematic convolutional

(RSC) encoders, separated by an interleaver. The interleaver randomizes the

information sequence of the second encoder to uncorrelate the inputs of the

two encoders. Since there are two encoded sequences, in the decoder, decoding

operation begins by decoding one of them to get the first estimate of the information

sequence. This requires that the decoder has to use a soft decision input and

to produce some kind of soft-output.

There are

two well-known soft-input / soft-output decoding method, namely, MAP (Maximum

A Posteriori) decoding algorithm and SOVA (Soft Output Viterbi Algorithm). Although

the computational complexity of MAP decoding is higher than that of SOVA, for

the better performance of MAP decoding algorithm over SOVA, MAP decoding algorithm

is preferred in designing Turbo decoder. To reduce the system complexity and

gate size of MAP decoding algorithm, log-MAP decoding method was introduced

which takes logarithm to MAP decoding algorithm and thus converts multipliction

and division to addition and subtraction. By this operation, MAP decoding algorithm

is divided largely into three computational parts, that is, forward-metric unit,

reverse-metric unit, and log-likelihood unit. Both forward and reverse metric

is needed to obtain the LLR (log-likelihood ratio) output.

In case of

directly implementing these blocks, two packet size memory for storing input

(system and parity input) and forward metric is needed, which becomes an excessive

burden in implementing to VLSI. However, in the continuous decoding using sliding-window

technology, the required memory size is reduced by using the characteristics

of convolution coding. Since the path history nearly converges if we set the

length of path-history to several times the constraint length of the code, we

set the small window which has the length of path history and operate two reverse

metric processors which alternatively performs decoding and path converging.

By using sliding-window technology, it is possible to achieve a small latency

and this effect becomes conspicuous when the block size increases. Further,

very small size memory compared with normal MAP decoding can be achieved.

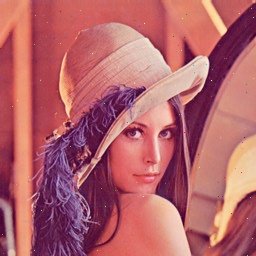

Original

and additive White Gaussian Noise added Lena

image.

First

and second iteration and you can see the image improved better and better.

|